Despite the ongoing outage of the National Vulnerability Database (NVD) operated by the National Institute of Standards and Technology (NIST), Greenbone’s detection engine remains fully operational, offering reliable vulnerability scanning without relying on missing CVE enrichment data.

Since 1999, The MITRE Corporation’s Common Vulnerabilities and Exposures (CVE) program has provided free public vulnerability intelligence by publishing and managing information about software flaws. NIST has diligently enriched these CVE reports since 2005, adding context to enhance their use for cyber risk assessment. In early 2024, the cybersecurity community was caught off guard as the NIST NVD ground to a halt. Now, roughly one year later, the outage has still not been fully resolved [1][2]. With an increasing number of CVE submissions each year, NIST’s struggles have left a large percentage of CVEs without context such as a severity score (CVSS), affected product lists (CPE) and weakness classifications (CWE).

Recent policy shifts pushed by the Trump administration have created further uncertainty about the future of vulnerability information sharing and the many security providers that depend upon it. The FY 2025 budget for CISA includes notable reductions in specific areas, such as a $49.8 million decrease in Procurement, Construction and Improvements and a $4.7 million cut in Research and Development. In response to the funding challenges, CISA has taken action to reduce spending, including adjustments to contracts and procurement strategies.

To be clear, there has been no outage of the CVE program yet. On April 16, just hours before its contract with MITRE was set to expire, CISA issued a last-minute directive extending the contract to ensure the operation of the CVE Program for an additional 11 months. However, nobody can predict how future events will unfold. The potential impact on intelligence sharing is alarming, perhaps signaling a new dimension to a “Cold Cyberwar” of sorts.

This article includes a brief overview of how the CVE program operates, and how Greenbone’s detection capabilities remain strong throughout the NIST NVD outage.

An Overview of the CVE Program Operations

The MITRE Corporation is a non-profit tasked with supporting US homeland security on multiple fronts including defensive research to protect critical infrastructure and cybersecurity. MITRE operates the CVE program, acting as the Primary CNA (CVE Numbering Authority) and maintaining the central infrastructure for CVE ID assignment, record publication, communication workflows among all CNAs and ADPs (Authorized Data Publishers) and program governance. MITRE provides CVE data to the public through its CVE.org website and the cvelistV5 GitHub repository, which contains all CVE Records in structured JSON format. The result has been highly efficient, standardized vulnerability reporting and seamless data sharing across the cybersecurity ecosystem.
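To make the structured JSON format more concrete, here is a minimal Python sketch that reads a few fields from a record laid out like those in the cvelistV5 repository. The sample record is trimmed and entirely made up (the CVE ID, vendor, product and description are placeholders), and `summarize_record` is a hypothetical helper name, not part of any official tooling.

```python
import json

# Illustrative record, trimmed to a few fields of the CVE JSON 5.x layout.
# Every value below is a placeholder, not a real CVE.
SAMPLE_RECORD = """
{
  "dataType": "CVE_RECORD",
  "cveMetadata": {"cveId": "CVE-0000-00000", "state": "PUBLISHED"},
  "containers": {
    "cna": {
      "descriptions": [
        {"lang": "en", "value": "Example buffer overflow in ExampleProduct before 2.4.1."}
      ],
      "affected": [
        {"vendor": "ExampleVendor", "product": "ExampleProduct",
         "versions": [{"version": "0", "lessThan": "2.4.1", "status": "affected"}]}
      ]
    }
  }
}
"""


def summarize_record(raw: str) -> dict:
    """Pull the ID, English description and affected products from a record."""
    record = json.loads(raw)
    cna = record["containers"]["cna"]  # data supplied by the submitting CNA
    description = next(
        (d["value"] for d in cna.get("descriptions", [])
         if d.get("lang", "").startswith("en")),
        "",
    )
    products = [(a.get("vendor", ""), a.get("product", ""))
                for a in cna.get("affected", [])]
    return {
        "id": record["cveMetadata"]["cveId"],
        "description": description,
        "products": products,
        # True only if the CNA itself supplied severity metrics.
        "has_cna_metrics": bool(cna.get("metrics")),
    }


summary = summarize_record(SAMPLE_RECORD)
print(summary)
```

Note that the sample carries a description and an affected product declaration but no severity metrics, which is the kind of gap that enrichment has historically filled.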

After a vulnerability description is submitted to MITRE by a CNA, NIST has historically added:

- CVSS (Common Vulnerability Scoring System): A severity score and detailed vector string that includes the risk context for Attack Complexity (AC), Impact to Confidentiality (C), Integrity (I), and Availability (A), as well as other factors.

- CPE (Common Platform Enumeration): A specially formatted string that acts to identify affected products by relaying the product name, vendor, versions, and other architectural specifications.

- CWE (Common Weakness Enumeration): A root-cause classification according to the type of software flaw involved.
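To give a feel for what two of these enrichment fields look like in practice, the following Python sketch splits a CVSS v3.1 vector string and a CPE 2.3 formatted string into their named parts. The function names are hypothetical, the CPE value uses a made-up vendor and product, and the CPE parser is deliberately simplified: it assumes no escaped colons inside any field.

```python
def parse_cvss_vector(vector: str) -> dict:
    """Split a CVSS vector such as 'CVSS:3.1/AV:N/AC:L/...' into a dict."""
    prefix, *metrics = vector.split("/")
    if not prefix.startswith("CVSS:"):
        raise ValueError(f"not a CVSS v3-style vector: {vector!r}")
    parsed = {"version": prefix.split(":", 1)[1]}
    for metric in metrics:
        name, value = metric.split(":", 1)
        parsed[name] = value
    return parsed


# The eleven attributes of a CPE 2.3 formatted string, in order.
CPE_FIELDS = (
    "part", "vendor", "product", "version", "update", "edition",
    "language", "sw_edition", "target_sw", "target_hw", "other",
)


def parse_cpe23(cpe: str) -> dict:
    """Split a CPE 2.3 formatted string (simplified: no escaped colons)."""
    pieces = cpe.split(":")
    if pieces[:2] != ["cpe", "2.3"] or len(pieces) != 13:
        raise ValueError(f"not a CPE 2.3 formatted string: {cpe!r}")
    return dict(zip(CPE_FIELDS, pieces[2:]))


cvss = parse_cvss_vector("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H")
cpe = parse_cpe23("cpe:2.3:a:examplevendor:exampleproduct:2.4.0:*:*:*:*:*:*:*")
print(cvss["AC"], cvss["C"], cvss["I"], cvss["A"])
print(cpe["vendor"], cpe["product"], cpe["version"])
```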

CVSS allows organizations to more easily determine the degree of risk posed by a particular vulnerability and to prioritize remediation accordingly. Also, because initial CVE reports only require a non-standardized affected product declaration, NIST’s addition of CPE allows vulnerability management platforms to conduct CPE matching as a fast, although somewhat unreliable, way to determine whether a CVE affects an organization’s infrastructure.
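The idea behind CPE matching, and its dependence on enrichment, can be sketched in a few lines of Python. This is a deliberately naive illustration under stated assumptions: it compares only the part, vendor, product and version fields, treats `*` as a wildcard, and ignores the version ranges that real matching has to handle. All CVE IDs and product names are placeholders.

```python
def cpe_key(cpe: str) -> tuple:
    """Return (part, vendor, product, version) from a CPE 2.3 string."""
    return tuple(cpe.split(":")[2:6])


def cpe_matches(inventory_cpe: str, cve_cpe: str) -> bool:
    """True if every compared field is equal or wildcarded in the CVE's CPE."""
    return all(
        want in ("*", have)
        for have, want in zip(cpe_key(inventory_cpe), cpe_key(cve_cpe))
    )


def cves_for_asset(inventory_cpe: str, cve_index: dict) -> list:
    """List the CVE IDs whose CPE entries match the given inventory item."""
    return sorted(
        cve_id
        for cve_id, cpes in cve_index.items()
        if any(cpe_matches(inventory_cpe, c) for c in cpes)
    )


# Hypothetical index mapping CVE IDs to the CPEs added during enrichment.
cve_index = {
    "CVE-0000-00001": ["cpe:2.3:a:examplevendor:exampleproduct:2.4.0:*:*:*:*:*:*:*"],
    # Unenriched: suppose this CVE also affects exampleproduct 2.4.0,
    # but no CPE has been attached yet.
    "CVE-0000-00002": [],
    "CVE-0000-00003": ["cpe:2.3:a:othervendor:otherproduct:*:*:*:*:*:*:*:*"],
}

asset = "cpe:2.3:a:examplevendor:exampleproduct:2.4.0:*:*:*:*:*:*:*"
hits = cves_for_asset(asset, cve_index)
print(hits)
```

The second entry is the interesting one: a scanner that relies purely on this lookup has no way to report a CVE that carries no CPE, so the finding is silently missed.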

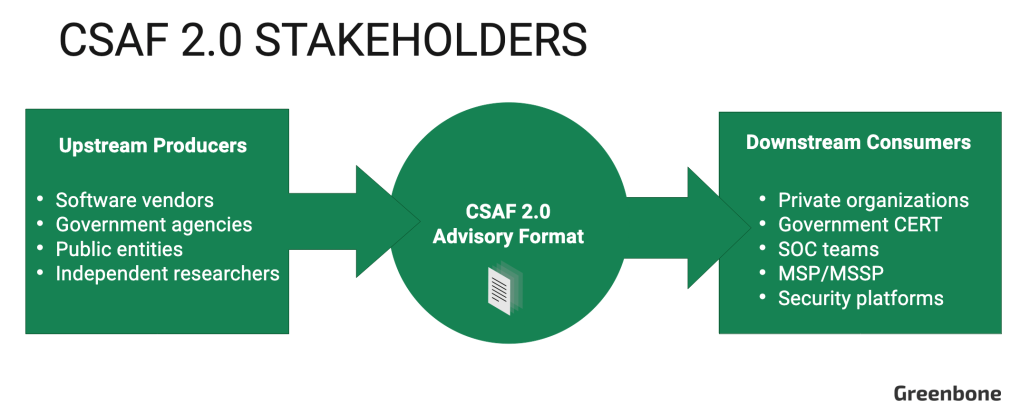

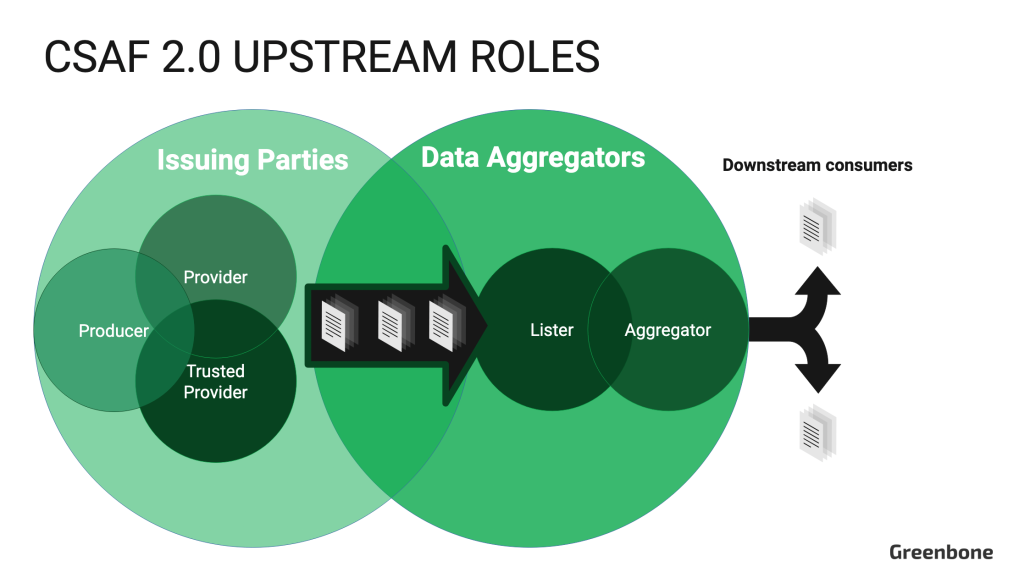

For a more detailed perspective on how the vulnerability disclosure process works and how CSAF 2.0 offers a decentralized alternative to MITRE’s CVE program, check out our article: How CSAF 2.0 Advances Automated Vulnerability Management. Next, let’s take a closer look at the NIST NVD outage and understand what makes Greenbone’s detection capabilities resilient against it.

The NIST NVD Outage: What Happened?

Starting on February 12, 2024, the NVD drastically reduced its enrichment of Common Vulnerabilities and Exposures (CVEs) with critical metadata such as CVSS severity scores, CPE product identifiers and CWE weakness classifications. The issue was first identified by Anchore’s VP of Security. As of May 2024, roughly 93% of CVEs added after February 12 were unenriched. By September 2024, NIST had failed to meet its self-imposed deadline for clearing the backlog; 72.4% of CVEs and 46.7% of new additions to CISA’s Known Exploited Vulnerabilities (KEV) catalog were still unenriched [3].

The slowdown in NVD’s enrichment process had significant repercussions for the cybersecurity community not only because enriched data is critical for defenders to effectively prioritize security threats, but also because some vulnerability scanners depend on this enriched data to implement their detection techniques.

As a cybersecurity defender, it’s worthwhile asking: was Greenbone affected by the NIST NVD outage? The short answer is no. Read on to find out why Greenbone’s detection capabilities are resilient against the NIST NVD outage.

Greenbone Detection Strong Despite the NVD Outage

Without enriched CVE data, some vulnerability management solutions become ineffective because they rely on CPE matching to determine if a vulnerability exists within an organization’s infrastructure. However, Greenbone is resilient against the NIST NVD outage because our products do not depend on CPE matching. Greenbone’s OPENVAS vulnerability tests can be built from unenriched CVE descriptions. In fact, Greenbone can and does include detection for known vulnerabilities and misconfigurations that don’t even have CVEs, such as those covered by CIS compliance benchmarks [4][5].

To build Vulnerability Tests (VTs), Greenbone employs a dedicated team of software engineers who identify the underlying technical aspects of vulnerabilities. Greenbone does include a CVE Scanner feature capable of traditional CPE matching. However, unlike solutions that rely solely on CPE data from the NIST NVD to identify vulnerabilities, Greenbone employs detection techniques that extend far beyond basic CPE matching. Therefore, Greenbone’s vulnerability detection capabilities remain robust even in the face of challenges such as the recent outage of the NIST NVD.

To achieve highly resilient, industry-leading vulnerability detection, Greenbone’s OPENVAS Scanner component actively interacts with exposed network services to construct a detailed map of a target network’s attack surface. This includes identifying services that are accessible via network connections, probing them to determine which products they run, and executing an individual Vulnerability Test (VT) for each CVE or non-CVE security flaw to actively verify whether it is present. Greenbone’s Enterprise Vulnerability Feed contains over 180,000 VTs and is updated daily, ensuring rapid detection of the newest disclosed threats.
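The flow described above (identify a service, determine the product, then run individual tests against it) can be sketched as a small Python pipeline. This is only a conceptual illustration and not how Greenbone’s scanner or VTs are implemented: the banner format, the product name `exampled`, the test ID and all function names are assumptions, and a simple version comparison stands in for a real active check, which would typically send requests and inspect the responses.

```python
import re
from typing import NamedTuple, Optional


class Service(NamedTuple):
    port: int
    product: str
    version: tuple


def identify_service(port: int, banner: str) -> Optional[Service]:
    """Guess product and version from a banner like 'Server: exampled/2.4.0'."""
    match = re.search(r"([A-Za-z]+)[/ ](\d+(?:\.\d+)*)", banner)
    if match is None:
        return None
    version = tuple(int(part) for part in match.group(2).split("."))
    return Service(port, match.group(1).lower(), version)


def check_example_flaw(service: Service) -> bool:
    """Stand-in test: hypothetical flaw fixed in exampled 2.4.1."""
    return service.product == "exampled" and service.version < (2, 4, 1)


# Hypothetical registry: one test function per known flaw.
CHECKS = {"EXAMPLE-VT-1": check_example_flaw}


def run_checks(service: Service) -> list:
    """Run every registered test against the service and list the hits."""
    return sorted(name for name, check in CHECKS.items() if check(service))


old = identify_service(8080, "Server: exampled/2.4.0")
new = identify_service(8080, "Server: exampled/2.4.1")
print(run_checks(old), run_checks(new))
```

Nothing in this flow needs a CPE from the NVD: the product is determined from the target itself, and each test encodes what the vulnerability description says about affected versions or behavior.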

In addition to its active scanning capabilities, Greenbone supports agentless data collection via authenticated scans. Gathering detailed information from endpoints, Greenbone evaluates installed software packages against issued CVEs. This method provides precise vulnerability detection without depending on enriched CPE data from the NVD.
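As a rough illustration of evaluating installed packages against issued CVEs, the Python sketch below compares an inventory of package versions with a list of advisories that name the first fixed version. The package names, CVE IDs and advisory layout are hypothetical, and the version comparison assumes plain dotted numeric versions; real distribution package versions (epochs, suffixes, backports) need a proper comparison routine.

```python
def version_tuple(version: str) -> tuple:
    """Convert '1.2.3' into (1, 2, 3); assumes dotted numeric versions only."""
    return tuple(int(part) for part in version.split("."))


# Hypothetical advisories derived from CVE descriptions.
ADVISORIES = [
    {"id": "CVE-0000-00001", "package": "examplelib", "fixed_in": "1.2.5"},
    {"id": "CVE-0000-00002", "package": "exampletool", "fixed_in": "3.0.0"},
]


def vulnerable_packages(installed: dict, advisories: list) -> list:
    """Return (package, installed_version, cve_id) for each unpatched package."""
    findings = []
    for advisory in advisories:
        have = installed.get(advisory["package"])
        if have is None:
            continue  # package not installed on this endpoint
        if version_tuple(have) < version_tuple(advisory["fixed_in"]):
            findings.append((advisory["package"], have, advisory["id"]))
    return findings


# Inventory as it might be gathered during an authenticated scan.
installed = {"examplelib": "1.2.3", "exampletool": "3.1.0"}
findings = vulnerable_packages(installed, ADVISORIES)
print(findings)
```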

Key Takeaways:

- Independence from enriched CVE data: Greenbone’s vulnerability detection does not rely on enriched CVE data provided by NIST’s NVD, ensuring uninterrupted performance during outages. A basic description of a vulnerability allows Greenbone’s vulnerability test engineers to develop a detection module.

- Detection beyond CPE matching: While Greenbone includes a CVE Scanner feature for CPE matching, its detection capabilities extend far beyond this basic approach, utilizing several methods that actively interact with scan targets.

- Attack surface mapping: The OPENVAS Scanner actively interacts with exposed services to map the network attack surface, identifying all network-reachable services. Greenbone also performs authenticated scans to gather data directly from endpoint internals. This information is processed to identify vulnerable packages. Enriched CVE data such as CPE is not required.

- Resilience to NVD enrichment outages: Greenbone’s detection methods remain effective even without NVD enrichment, leveraging CVE descriptions provided by CNAs to create accurate active checks and version-based vulnerability assessments.

Greenbone’s Approach is Practical, Effective and Resilient

Greenbone exemplifies the gold standard of practicality, effectiveness and resilience, setting a benchmark that IT security teams should strive for. By combining active network mapping, authenticated scans and direct interaction with target infrastructure, Greenbone ensures reliable, resilient detection capabilities in diverse environments.

This higher standard enables organizations to confidently address vulnerabilities, even in complex and dynamic threat landscapes. Even in the absence of NVD enrichment, Greenbone’s detection methods remain effective. With only a general description, Greenbone’s VT engineers can develop accurate active checks and product version-based vulnerability assessments.

Through a fundamentally resilient approach to vulnerability detection, Greenbone ensures reliable vulnerability management, setting itself apart in the cybersecurity landscape.

NVD / NIST / MITRE Alternatives

The MITRE issue is a wake-up call for digital sovereignty, and the EU has already reacted quickly. A long-awaited alternative, the EuVD from ENISA, the European Union Agency for Cybersecurity, is now available and will be covered in one of our upcoming blog posts.